Designing custom chatbots is becoming easier. How can we start experimenting with them to support student learning?

Since OpenAI released ChatGPT a little over a year ago, many of us have been exploring the implications of generative AI for education, mostly using general-purpose bots like ChatGPT, Bing, or Google Bard.

As 2024 begins, we’re entering a new phase of experimentation that moves beyond these generic uses of AI to something better suited to support the particular goals of teaching and learning.

Why Course Chatbots?

After watching AI evolve during the last year, we feel strongly that generative AI will not effectively support student learning in the long term unless we can customize it for educational contexts.

Until recently, the most common way to experiment with generative AI was to use a chatbot like ChatGPT that responded to our prompts with whatever data it was trained on. We could customize that experience by adjusting the prompt, and we could share that prompt with students for them to use, but we still had no way to direct the chatbot to reference our content in its responses. This made it difficult to design chatbots that truly felt like they belonged in our courses and were meant to support learning.

When OpenAI released GPTs in the fall of 2023, it suddenly became feasible to build chatbots guided by our customized instructions and using our own content — without needing technical skills. This seemed to offer us a new opportunity to design chatbots with educational purposes in mind.

Creating Our Own

Despite the promise of GPTs, we were soon disappointed to learn that only those with paid ChatGPT Plus accounts could access them, whether to create chatbots or use them. The only alternative was using OpenAI’s Assistants API (in beta), which required some technical know-how to implement.

Fortunately, the WordPress plugin AI Engine Pro (by Meow Apps) offered a workaround that would allow us to simulate the experience of a GPT but share it more easily and cheaply. Tim Lindgren used this to develop an inexpensive framework for experimentation that involved creating a WordPress site for each chatbot with a page we could link to from within a Canvas site.

Using the Assistants API, we created custom prompts and uploaded any documents we wanted the chatbot to reference in its answers. This provided the building blocks for a lightweight prototype that we could begin to experiment with in a few courses.
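To make those building blocks a little more concrete, here is a minimal sketch of the kind of settings such a chatbot needs: a name, a custom prompt, and a list of uploaded documents. It is an illustration rather than our actual configuration. The function `build_assistant_config`, the example prompt, the file IDs, and the model name are all hypothetical, and the field names are only loosely modeled on the beta Assistants API as it looked in early 2024, so they may not match the current API. Nothing in this sketch contacts OpenAI.

```python
import json


def build_assistant_config(name, instructions, file_ids, model="example-model"):
    """Assemble chatbot settings as a plain dictionary (no network calls).

    Hypothetical helper for illustration only. The keys are loosely modeled
    on the beta Assistants API (early 2024) and are an assumption.
    """
    if not instructions.strip():
        raise ValueError("A course chatbot needs a custom prompt.")
    return {
        "name": name,
        "model": model,  # placeholder, not a real model name
        "instructions": instructions.strip(),  # the custom prompt
        "tools": [{"type": "retrieval"}],  # lets the bot consult uploaded files
        "file_ids": list(file_ids),  # IDs of documents uploaded beforehand
    }


# Hypothetical example values, not taken from any of the pilot courses.
config = build_assistant_config(
    name="Course Guide",
    instructions=(
        "You are a friendly guide for this course. "
        "Answer questions using the uploaded syllabus and assignment descriptions."
    ),
    file_ids=["file-syllabus", "file-assignments"],
)

print(json.dumps(config, indent=2))
```

Keeping the prompt and the document list together in one place makes it easier to see, and later adjust, exactly what each course chatbot has been told and given.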

The AI Engine plugin also captures records of chatbot discussions so we can monitor how students are using it and make adjustments as the semester progresses.

Project Goals

With a lightweight method of creating chatbots in place, we identified three main goals for our experiments this semester:

Creating a Sandbox for Learning

How does AI actually work, and how can we design effective learning experiences with it? We don’t feel these questions can be answered in the abstract but require using AI in a real course context.

Designing a course chatbot allows us to see how viewing our course through this lens affects the course design process:

- Does it give us new ideas for how to support student learning?

- Does it solve actual problems? If so, which ones and how?

- Where might it get in the way of learning?

But even more importantly, we want to hear from students:

- What do they find helpful or not helpful?

- What ideas do they have for how AI might support their learning?

- How does the chatbot affect the relationship between faculty and students?

We want it to be a conversation starter grounded in actual interfaces and interactions. We also want to create a community of practice in which we design our relationship with these new tools together.

We hope these preliminary experiments will inform the conversations we will need to have as a university community and the decisions we’ll need to make about AI platforms, policies, and professional development.

Providing Context and Curation

We feel strongly that AI will only be a meaningful and effective contribution to learning if professors can use it in ways that align closely with the goals and ethos of the course.

- Personality: Because chatbots give the impression they have personality, faculty need to be able to shape how they relate to students.

- Purpose: Students need to understand why the chatbot is being used in the course and how best to use it to support their learning.

- Accuracy: Both students and faculty need to be able to trust that the information they are getting is accurate and in line with the course as a whole. We’re interested to see how being able to shape the content that the chatbot references, in turn, shapes how the chatbot behaves in the context of a course and whether it creates a safe place to explore and get help.

Exploring Equity and Access

Finally, we’re interested in the ways AI might assist students who need additional help and increase access to resources for those who need them. Could chatbots enhance equity of access for students from non-traditional or marginalized backgrounds, who could use them to uncover the hidden syllabus, get content-specific coaching, and engage with the course on their own terms?

This is very much an open question, since new technologies can have unintended consequences and can amplify inequity rather than remedy it, but we hope to understand it better after seeing how students respond to the tool.

Spring Course Chatbots

Given the short lead time for developing these chatbots, we’re initially focusing our experiments on a few courses taught by CDIL staff so we can provide support and gather as much in-depth feedback as possible.

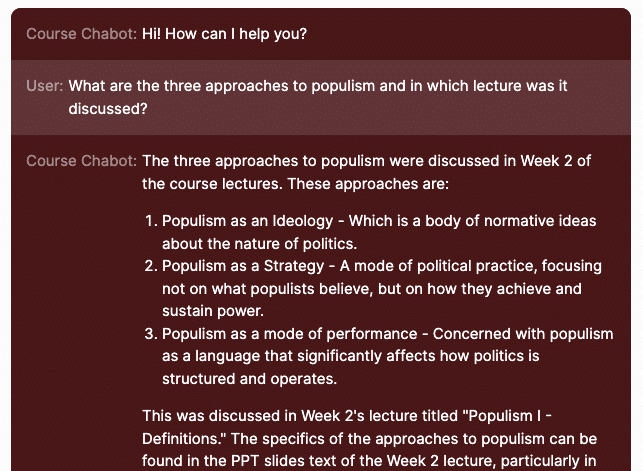

John FitzGibbon, Populism and the Rise of Anti-Democracy

In addition to adding the syllabus and assignment descriptions to the chatbot for his undergraduate political science course, John FitzGibbon has added two open-access textbooks and the transcripts of his lectures to provide curated resources.

By doing so, he hopes to create a safe space for students to ask basic questions about political science, particularly for the many non-majors who may feel like they are playing catch-up with foundational disciplinary knowledge referenced in the course. By supporting students in gaining a basic toolkit of concepts and key ideas, John hopes to free up their energy to engage more deeply with the more complex topics in the course and to apply the key ideas more effectively as they explore examples of populism from around the world.

Noël Ingram, First-Year Writing Seminar

Noël Ingram designed her chatbot to act as a guide for students in her First-Year Writing seminar that focuses on multimodal composition. Because the course introduces students to modes of writing and assessment methods they may not be familiar with, it’s crucial that students understand how the course works and be able to ask questions about this structure. She’s interested to see if the chatbot will help to reduce cognitive load for students, especially during key busy (and stressful!) times of the semester. And just maybe, make engaging with syllabus content fun.

She is also using a second chatbot design for another purpose. “Lumi” is a chatbot designed to support students in setting intentions and reflecting on their lives in the context of the course. It’s part of the True North platform and curriculum designed by Belle Liang in the Lynch School of Education.

Kyle Johnson, Perspectives on War, Aggression, and Conflict Resolution

Kyle Johnson wants to provide a chatbot that can answer basic questions students have about course policies, assignments, and schedule. Since Kyle’s course includes some complex multi-step activities with very specific learning outcomes, he also hopes the bot can serve as a coach, helping students get started and receive basic feedback to improve their assignments. Instead of doing work on students’ behalf, it should be conversational and Socratic, helping students do their own thinking and work.

To experiment with the best way to prompt a chatbot to function in this way, Kyle wrote a more complex syllabus with information spelled out more didactically than one typically would for a human reader. [example].

Current Limitations

Streaming: Currently, the Assistants API does not support streaming, which means that instead of typing out its response gradually, the chatbot presents it all at once. Since we’re used to seeing answers emerge as if the chatbot is typing them out as it thinks, this is not the most intuitive experience for users. We’re hoping that this feature will be added soon.
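For readers curious about the difference, the toy sketch below contrasts the two behaviors. It does not call any chatbot or API, and the function names `respond_all_at_once` and `respond_streaming` are made up for illustration: one returns the whole reply in a single piece, the other hands it over word by word so each piece can be displayed as soon as it is ready.

```python
def respond_all_at_once(words):
    """Return the complete reply in one piece (the non-streaming experience)."""
    return " ".join(words)


def respond_streaming(words):
    """Yield the reply one word at a time (the streaming experience)."""
    for word in words:
        yield word


# A made-up reply, split into words to stand in for generated text.
reply = ["Office", "hours", "are", "on", "Tuesdays."]

# Non-streaming: nothing is shown until the full reply is ready.
print(respond_all_at_once(reply))

# Streaming: each piece is shown as soon as it is produced.
for piece in respond_streaming(reply):
    print(piece, end=" ", flush=True)
print()
```

Both versions deliver the same words in the end. The difference is only in when the reader starts seeing them, which is why a non-streaming chatbot can feel slower even when the total wait is similar.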

Speed: Response times may be a little slow. The way the Assistants API indexes documents for retrieval prioritizes ease of use, so it may not be as optimized on the back end as other ways of indexing external material.

Scalability: The current pilot is not scalable since it requires stitching together several platforms to create a seamless experience, and it’s not clear yet what the usage costs will be from OpenAI once students have been using it for the semester.

Next Steps

Our goal for this semester is simply to learn as much as we can from how students use the chatbots and from the role they play in the courses overall.

Generative AI tools will likely change substantially even before the term ends, but this gives us a chance to begin learning by trying something concrete with the tools we have available now, with the hope that what we learn will leave us better prepared for whatever is next.

For reflections on what we learned from the pilot, see Helping Chatbots Find Their Purpose.

For information on other resources related to teaching with AI at Boston College, see Engaging with Generative AI.